
Web Application Design Features

First, we shall clear up the difference between PHP applications and plain HTML pages. It is also important to define what a large-scale web project is and how the scale depends on the number of daily visitors and the hardware resources.

PHP Applications

Bitrix Site Manager is developed in the PHP scripting language and supports PHP4 and PHP5. Having a script execution environment in a content management system makes it possible to create dynamic web projects, manage information easily, analyze project effectiveness, modify the site content depending on visitor traffic, and so on. Practically all modern web projects are written in a programming language, and PHP is among the most popular choices: many engineers know the language and build sites in it.

Not so long ago, HTML projects consisted only of a set of static pages with HTML markup. The web server read the pages from disk and transferred them over TCP/IP. This requires no additional applications and therefore no additional memory or database. The approach is simple and convenient but not sufficient for modern projects.

PHP (which stands for "PHP: Hypertext Preprocessor") is a widely used open source general-purpose programming language. PHP was designed specifically for building web projects and can be embedded in HTML code. Note the difference between PHP scripts and applications written in other languages such as Perl or C: instead of writing a program that builds the HTML through a large number of API functions, we write HTML with some PHP commands added. The PHP code islands are enclosed in special opening and closing tags that let the PHP processor find the beginning and the end of each PHP fragment inside the HTML.
PHP scripts are executed on the server side. This is the key trait that distinguishes PHP from client-side scripting languages such as JavaScript. If you run a PHP script on your server, the client receives only the result of the script execution and cannot see the script itself. You can even configure your web server so that HTML files are processed by the PHP interpreter; your visitors will never know whether they get plain HTML pages or the result of script execution.

From the administrator's point of view, it is very important that PHP scripts are executed on the server side as interpreted programs. This means that each time a PHP page is requested, it is processed on the server by the PHP interpreter: the language syntax, semantics and function calls are verified, and only then is the code executed. There are, of course, some techniques that avoid this repeated work; we shall touch on them later.

As a result, PHP sites consume more memory per web server instance than plain HTML pages. In most cases, the web server can start sending a page to a client only after the PHP interpreter has processed it, which makes page delivery slower than for a static HTML page.

Databases

Nowadays, it is almost impossible to create a web project of any value without a database, which stores news, forum messages, commercial catalogues, web store orders and more. Bitrix Site Manager supports the following database types.

A database is an independent client-server application running on an operating system. External applications connect to the database via TCP/IP or local pipes; they send SQL queries and obtain the required data from the database. In most cases, the database runs on the same machine as PHP and the web server. However, the database can also be located on a different machine, or even on a machine of another hosting provider. Importantly, there are two possible types of connection between the database and a web application:

A simple connection is established each time a page is executed, at the first query to the database. The connection closes when the page is completed.

A persistent connection (PHP has special functions with the *_pconnect suffix for such connections) is established only once, at the first query to the database. All subsequent queries served by the same web server process, even from other pages, use the already open connection.

Establishing a connection is a time and resource consuming process: a TCP/IP connection is created, memory buffers are allocated, authorization is performed, converters are initialized, and other operations are performed. Persistent connections are therefore preferable, provided that they do not exceed the limit of concurrent database connections.

Note! When establishing a connection to the database, PHP usually tries to connect to the local socket (if "localhost" or "localhost:port" is specified as the server parameter) without opening a TCP/IP connection. If you want to force a TCP/IP connection to a local address, use 127.0.0.1 instead of localhost. Connections without TCP/IP are preferable in terms of performance, especially when transferring large amounts of data from the database. But when the database is located on a remote machine, which makes a TCP/IP connection inevitable, persistent connections are even more desirable.
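To make the difference concrete, the following minimal Python sketch counts how many times a connection is opened under each scheme. `FakeDatabase` and both `serve_pages_*` functions are hypothetical stand-ins invented for illustration; they are not part of PHP, MySQL or Bitrix Site Manager, and the sketch models a single web server process only.

```python
class FakeDatabase:
    """Stand-in for a database server; counts how many connections were opened."""

    def __init__(self):
        self.connections_opened = 0

    def connect(self):
        self.connections_opened += 1
        return object()  # opaque connection handle


def serve_pages_simple(db, pages):
    """Simple connection: every page opens its own connection and drops it."""
    for _ in range(pages):
        conn = db.connect()
        del conn  # the connection is closed when the page completes


def serve_pages_persistent(db, pages):
    """Persistent connection: the process opens one connection and reuses it."""
    conn = None
    for _ in range(pages):
        if conn is None:
            conn = db.connect()  # only the first page pays the connection cost


simple_db = FakeDatabase()
serve_pages_simple(simple_db, pages=1000)

persistent_db = FakeDatabase()
serve_pages_persistent(persistent_db, pages=1000)

print("simple:", simple_db.connections_opened)
print("persistent:", persistent_db.connections_opened)
```

For 1000 page views served by one process, the simple scheme opens 1000 connections and the persistent scheme opens one. The price is that each web server process keeps its connection open between pages, which is why the limit of concurrent database connections matters.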

In Bitrix Site Manager, the connection type is specified in /bitrix/php_interface/dbconn.php.

define("DBPersistent", true);

$DBType = "mysql";

$DBHost = "localhost:31006";

$DBLogin = "root";

$DBPassword = "";

$DBName = "bsm_demo";

$DBDebug = false;

$DBDebugToFile = false;

Use the

The usage of persistent connections may require changing certain settings in Apache and MySQL. Ensure that you do not exceed the maximum number of allowed connections. You will find more information about your database in the PHP documentation.

What are large-scale projects?

As mentioned above, PHP applications differ from static HTML pages with respect to server administration and preparation for efficient operation. But what kind of project can we call large, and when do web systems need special preparation and configuration for faster PHP code execution? A range of factors combine to make a project large-scale and require proper configuration and administration:

Practice shows that following even some of the recommendations makes PHP much faster. In this course, we shall summarize the minimum required configuration recommendations and discuss Windows issues in more detail.

Why do sites die?

First of all, we have to thoroughly examine the main reasons for unstable web server operation or even total breakdown. A clear understanding of these reasons will allow you to select the recommendations more carefully and use the hardware resources more efficiently.

Unstable systems

Any hardware, software or network system is built to withstand a certain load. We are not surprised when a car tire is tested for its maximum permissible speed and sold with the corresponding ratings and cautions. But we can hardly find the same attitude to software and hardware. Companies rarely test a server for maximum load and estimate the maximum allowable traffic before bringing a project to the market. Yet the principal errors and weak points can be easily recognized during testing, which makes it possible to increase server performance considerably. We shall discuss why an ordinary web server breaks down during testing or under real peak loads. The possible reasons are as follows.

Main memory. Huge processes

Let's study web server memory usage in more detail. As mentioned above, most configurations start an individual web server process for each new query; with extra modules and the PHP interpreter, such a process takes 20-30 MB of memory or more. 10 running processes take 200-300 MB. On a 1 GB system, 100 processes lead to heavy swapping because together they require 2-3 GB of memory. As practice shows, low memory can be a key factor of instability at peak loads.

It should also be mentioned that in a common configuration, the web server processes all requests: PHP pages, images, binary files, style sheets and other site components. A site page can contain tens or even hundreds of images, and the web server sends or receives these files as well. Bearing in mind that a common web server process requires 20-30 MB, note that the PHP interpreter takes no part in processing static elements, yet the memory is still used and the process is busy serving requests. It turns out that a process spends 90% of its time handling static documents, using system resources ineffectively. The static content problem is very substantial. One of the most important tasks is to minimize the number of static queries processed by the web server.
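The memory figures above are plain multiplication, and a short Python sketch makes them easy to rerun with your own numbers. The function names are illustrative, and the sketch optimistically assumes that the whole 1 GB of RAM is available to the web server, with nothing reserved for the operating system or the database.

```python
def memory_needed_mb(processes, mb_per_process):
    """Total memory held by the given number of web server processes."""
    return processes * mb_per_process


def processes_that_fit(ram_mb, mb_per_process):
    """How many processes fit into RAM before the system starts swapping."""
    return ram_mb // mb_per_process


RAM_MB = 1024  # the 1 GB machine from the text

for mb_per_process in (20, 30):
    needed = memory_needed_mb(100, mb_per_process)
    fit = processes_that_fit(RAM_MB, mb_per_process)
    print(f"{mb_per_process} MB/process: 100 processes need {needed} MB, "
          f"only {fit} fit into {RAM_MB} MB")
```

At 20-30 MB per process, 100 processes need 2000-3000 MB, while only 34 to 51 processes fit into 1 GB even under this optimistic assumption.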

Main memory. Slow channels

Another aspect is the problem of client Internet channels that are slow compared to the web server processing power. At first sight, this problem does not seem important, yet it can cause many problems. For example, page generation can take 0.01 sec, while transferring the page from the server to a client can take 5 to 50 sec or more, even with compression. While a page is being transferred to a client, the web server keeps an almost inactive Apache process in memory. The process waits for the transfer to complete and cannot release itself and its memory to start processing incoming queries. Administrators often do not realize how strongly this factor influences system stability and memory use.

Let's make a simple calculation. Consider two systems, A and B. In the "A" system, the page creation time is 0.1 sec and the average page transfer time is 5 sec (in real life, this value can be higher). In the "B" system, the page creation time is 0.1 sec and the page transfer time is zero. Assume each site receives 100 queries per second.

The "A" system: processing 100 queries per second requires running 100 simultaneous web server processes. You may ask: "Why?" Because even though a query is processed within 0.1 sec, the processes are not yet ready to handle other requests: they sit in memory and just wait for their current clients to receive the page (5 sec). In every following second, the web server receives 100 more queries and has to start 100 more processes. Consequently, by the fifth second there will be 500 processes in memory, and only from this moment on will the processes started in the first second begin to terminate and free memory for new queries. Hence, the "A" system requires about 500 running processes for adequate performance, which takes about 10 GB of memory at best.
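The same reasoning can be written as one formula: the average number of busy processes equals the request rate multiplied by the time each process is held (generation plus transfer). The Python sketch below applies it to both systems defined above. The function name is illustrative, and the 20 MB per process is an assumption taken from the lower bound quoted earlier.

```python
def processes_needed(requests_per_sec, generation_ms, transfer_ms):
    """Average number of busy processes = arrival rate x time each one is held.

    Times are in milliseconds so that the arithmetic stays in integers.
    """
    busy_ms = requests_per_sec * (generation_ms + transfer_ms)
    return -(-busy_ms // 1000)  # ceiling division


MB_PER_PROCESS = 20  # assumed per-process footprint

system_a = processes_needed(100, generation_ms=100, transfer_ms=5000)
system_b = processes_needed(100, generation_ms=100, transfer_ms=0)
system_a_fast_php = processes_needed(100, generation_ms=1, transfer_ms=5000)

print("A:", system_a, "processes,", system_a * MB_PER_PROCESS, "MB")
print("B:", system_b, "processes,", system_b * MB_PER_PROCESS, "MB")
print("A with 0.001 s generation:", system_a_fast_php, "processes")
```

The formula gives 510 processes for the "A" system, because it also counts the 0.1 sec of generation time that the rough estimate of 500 leaves out, and 10 processes for the "B" system. Cutting the generation time of the "A" system to 0.001 sec still leaves 501 processes, since the transfer time dominates.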
Note that even reducing the page generation time to 0.001 sec would not improve the system performance, because the processes wait for data to be transferred to clients over slow channels, not for page generation. This means that the performance of the "A" system is not related to the performance of PHP or of the server.

The "B" system: the web server receives 100 requests within the first second. One process handles one query in 0.1 sec and, since we assumed that transferring a page to a client takes no time, one web server process can handle 10 user queries per second. Only 10 processes are therefore required to handle 100 requests. By the end of the first second, all requests will have been processed by only 10 processes, and in the second second all the processes will be ready to handle new requests. The same will happen in the third second, and an hour later. Hence, only 10 processes are required for adequate performance of the "B" system, which takes only 200 MB of memory.

It should be emphasized that reducing the page generation time to 0.01 sec increases the system performance dramatically, because a single process will then handle 100 requests per second. The performance of the "B" system depends solely on the performance of PHP rather than on slow channels.

This example clearly illustrates the negative influence of slow client channels on overall web system performance: on slow channels, web servers use memory ineffectively. Looking ahead, techniques exist that make it possible to build a system close to the "B" model and make a web system independent of slow channels, dramatically increasing its performance and stability.

A two-tier configuration: Front-End and Back-End

Creating a two-tier Front-End + Back-End system to process requests is the best way to eliminate the problems highlighted above.
The Front-End is the public area of a web project: it receives client requests, passes them to the Back-End and delivers the content to the user. The Back-End is the unit that provides the processing power of the system: it executes PHP scripts, builds HTML pages and maintains the application business logic. Let's consider an example of the two-tier configuration in more detail.

Front-end

We shall start by building a front-end system and determining the purposes and tasks of this tier. NGINX, SQUID, OOPS or another similar product can be used as a front-end server.

NGINX is a very compact and fast web (HTTP) server. It consumes very little memory, can serve requests for static files on its own, and can act as an accelerating non-caching proxy. For example, if an image is requested, NGINX reads the data from disk and transfers the file to the client.

SQUID and OOPS are classic proxy servers capable of proxying requests; they usually cache static requests by storing copies of static objects in the cache (or on disk for persistent objects) for a certain period of time. NGINX showed the best run-time results; however, caching proxy servers can also produce good results.

In a two-tier architecture, the front-end is the system exposed to the client: a lightweight web or proxy server that receives user requests and serves those that can be processed without the back-end. If NGINX or a similar server is used, all static objects are read directly from disk and transferred to the client. If a caching proxy server is used, the back-end is queried for static objects, images and style sheets only once. Afterwards, these files are stored in the front-end cache according to the cache policy and transferred to clients without querying the back-end. The main goals we attempt to achieve by creating a front-end tier are:

As you can see, the front-end eliminates quite a few risks and creates the most favorable conditions for the system.

Note! If you intend to use a caching proxy server as a front-end, configure the caching time of documents. Images, style sheets, XML files and other static objects are subject to the caching policy. After the first request, files are stored in the proxy server and are transferred to clients without querying the back-end. The recommended image caching time is 3 to 5 days. The following example shows a cache configuration that can be specified in the .htaccess file of the server root:

ExpiresActive on
ExpiresByType image/jpeg "access plus 3 day"
ExpiresByType image/gif "access plus 3 day"

This example requires that the web server allows settings to be overridden in .htaccess and that the "mod_expires" module is installed. These directives instruct the front-end to cache images. Queries for content pages will not be cached and will be directed to the back-end. In some cases, the front-end caching policy is configured independently of the back-end configuration.

Interaction with Back-end

The Back-End is an ordinary Apache web server capable of running PHP applications. The back-end can still serve queries for images and static files if the front-end is a caching proxy server. But it is very important to minimize the number of queries for static files that reach the back-end, so that 99% of all back-end queries are PHP pages. Remember that using the back-end to process static queries is extremely expensive in terms of resources and time. By configuring the back-end properly, you can get a significant performance gain and stabilize memory consumption.

In most cases, the back-end is an ordinary Apache web server on a non-standard port (e.g. 88) that responds only to queries from localhost or the front-end IP address. It is better, however, to bind the back-end to additional internal IP addresses such as 127.0.0.2 or 127.0.0.3 on port 80; otherwise, undesirable redirects to an invalid port of the front-end server may occur.

Let us outline the interaction between the front-end and the back-end when processing a user request for an ordinary page.

Assume that the front-end accepts a user request, for example, at http://www.bitrixsoft.com on port 80. We use NGINX as the front-end on our site. The query is accepted and relayed to the back-end (a PHP-enabled Apache web server), which processes all queries at http://127.0.0.2:80/.

The back-end web server executes the query by processing a PHP script and generating an HTML page to be sent to the client. The prepared HTML page is then transferred from the back-end to the front-end as the response to the user's query; the connection between the front-end and the back-end closes (avoid using KeepAlive at the back-end); and the back-end process frees its memory or starts processing another query.

The front-end transfers the ready page to the client for as long as required, even on a slow channel. Transferring the page to the client requires a minimum of front-end memory.

Having received the page, the client browser sends a series of requests for images and style sheets. All these requests are received and processed by the front-end without troubling the back-end. The front-end reads all the static files directly from disk without using the slow and expensive back-end processes.

As practice shows, the two-tier Front-End + Back-End configuration reduces both memory consumption and request processing time to a minimum, and leaves more memory to the database for better performance. This configuration reduces server load significantly when serving a large number of static files: music, software installation packages, presentations etc.
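The division of labour described above can be sketched as a simple routing rule. The following Python fragment is only an illustration: the `STATIC_EXTENSIONS` set and the `handled_by` function are hypothetical names, the list of extensions is an assumption, and a real front-end such as NGINX expresses the same rule in its own configuration rather than in application code.

```python
import posixpath

# Assumed set of static file types; adjust to the content of your own site.
STATIC_EXTENSIONS = {".jpg", ".jpeg", ".gif", ".png", ".css", ".js", ".xml", ".zip", ".mp3"}


def handled_by(request_path):
    """Return the tier that answers the request in the two-tier layout."""
    path = request_path.split("?", 1)[0]  # drop the query string
    extension = posixpath.splitext(path)[1].lower()
    return "front-end" if extension in STATIC_EXTENSIONS else "back-end"


requests = [
    "/index.php",
    "/images/logo.gif",
    "/css/main.css",
    "/catalog/?page=2",
    "/download/setup.zip",
]

served = {path: handled_by(path) for path in requests}
backend_share = sum(1 for tier in served.values() if tier == "back-end") / len(requests)

for path, tier in served.items():
    print(f"{path:22} -> {tier}")
print("share of requests reaching the back-end:", backend_share)
```

In this made-up sample, only the two PHP page requests reach the back-end. On a real page with tens of images, the share of requests that need an expensive back-end process is much smaller.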
Stabilize the Back-end memory consumption

Even with the two-tier configuration, it is essential to stabilize the system memory consumption irrespective of the load and to protect the server from overload. We recommend that you set the MaxClients parameter in the Apache settings to a value between 5 and 50 to keep memory consumption under control and minimize the number of running back-end processes.

By setting the MaxClients parameter, you limit the number of simultaneous back-end processes. Thus, you impose a strict limit on memory consumption and prevent the machine from breaking down under stress loads.

The MaxClients value should be derived from the system resources and the load. You can use the following algorithm to find the best value. Measure the amount of memory consumed by one back-end web server process. Assume you observe 20 MB. If MaxClients is set to 5, the maximum memory allocation is 100 MB. A value of 5 to 10 is recommended for a 512 MB machine. With a front-end properly configured to process static files, such a system will easily support 50000 hits a day (10 to 20 thousand unique visitors). For large-scale, twin-machine projects, or if the server has a lot of RAM, the MaxClients value should be derived from stress test results.

Important! Choose the MaxClients value so that under stress conditions the processor load is at most 90% and never reaches 100%. This ensures that the system will not suffer from performance degradation at peak loads.
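The memory part of this algorithm can be sketched in a few lines of Python. The function names and the idea of a "memory budget for the back-end" are illustrative assumptions; the upper limit of 50 is the top of the range recommended above. The processor load criterion cannot be computed this way and still requires a stress test.

```python
def backend_memory_mb(max_clients, mb_per_process):
    """Worst-case memory used by the back-end when every process is running."""
    return max_clients * mb_per_process


def max_clients_for(budget_mb, mb_per_process, upper=50):
    """Largest MaxClients that fits the memory budget, capped at the upper limit."""
    return min(upper, budget_mb // mb_per_process)


MB_PER_PROCESS = 20  # measured size of one back-end process (value from the text)

print(backend_memory_mb(5, MB_PER_PROCESS))    # memory used with MaxClients 5
print(max_clients_for(200, MB_PER_PROCESS))    # MaxClients for a 200 MB budget
print(max_clients_for(4096, MB_PER_PROCESS))   # a large budget is capped at 50
```

With 20 MB per process, MaxClients 5 costs at most 100 MB, and a 200 MB budget allows MaxClients 10, which matches the range suggested for a 512 MB machine.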

Another important parameter is the front-end response timeout. It should be configured so that the front-end waits for a back-end web server process to be released if there are no spare processes. This effectively forms a query queue that protects the back-end from overload.

The back-end process management parameters should be consistent with the MaxClients value. For example, if MaxClients is 5, the following parameters need to be changed:

MinSpareServers 5
StartServers 5
MaxClients 5

These settings mean that when the back-end server starts, it launches as many web server processes as there are allowed client connections. The processes never terminate, so they are always ready to accept and process a front-end request. The amount of memory they allocate is known at start-up, which makes it possible to assign the remaining memory to the database.

Suggestion. It is a good idea to begin with a small value of MaxClients, e.g. 5, and monitor the page execution time (?show_page_exec_time=Y). If, on a fast channel and under stress load, the page execution time stays consistently low while pages load with a significant lag, the current number of back-end processes is insufficient to respond quickly to front-end requests. In this case, the MaxClients value can be increased, taking the memory allocation balance into consideration. Another advantage of MaxClients is the database connection optimization.

Attention! Administrators often neglect this recommendation, either ignoring the limit or setting it to an unjustifiably large value. This always results in an unbalanced two-tier system and instability at peak loads.
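The MaxClients limit also bounds the number of database connections, on the assumption (made for this sketch) that each back-end process holds at most one connection. The database section of this document recommends keeping the database max_connections parameter 10% to 20% above MaxClients. The hypothetical helper below computes that range with integer arithmetic.

```python
def max_connections_range(max_clients):
    """Return (low, high): MaxClients plus a 10% and a 20% reserve, rounded up."""
    low = -(-max_clients * 110 // 100)   # ceiling of max_clients * 1.10
    high = -(-max_clients * 120 // 100)  # ceiling of max_clients * 1.20
    return low, high


for max_clients in (5, 20, 50):
    low, high = max_connections_range(max_clients)
    print(f"MaxClients {max_clients}: max_connections between {low} and {high}")
```

For MaxClients 50, this suggests a max_connections value between 55 and 60. An unbounded or oversized MaxClients makes any such estimate meaningless, which is one more reason not to neglect the limit.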

The recommendations given in this lesson can:

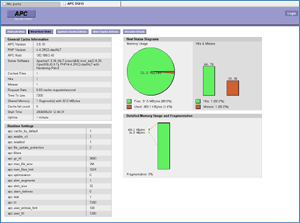

PHP Performance

Web servers spend up to 60% of their time repeatedly recompiling PHP code before execution. A key method of reducing the processor load is using a PHP pre-compiler. Today, the following PHP pre-compilers are available on the market: Alternative PHP Cache (APC), eAccelerator, Zend Performance Suite, Turck MMCache, PHP Accelerator, and AfterBurner Cache.

Currently, APC (Alternative PHP Cache) is the most popular and most actively developed product. We expect to see it included in the PHP6 package. We recommend APC, or eAccelerator as a proven, high-quality alternative.

Remember to reserve sufficient memory for compiled PHP scripts. 16 to 24 MB is usually enough, but you can increase it to 32 MB to be on the safe side and account for Control Panel scripts. The memory is shared between the web server processes, which means that it is reserved only once.

To reduce the memory used by the web server processes, remove all unused PHP modules at compilation or run time.

To boost PHP session performance, switch from file-based sessions to shared memory sessions. Compile PHP with the --with-mm option and set the session.save_handler=mm parameter in php.ini. This option is not available on Windows platforms.

Page Compression

Using compression significantly increases the page transmission speed from the server to the client. A common compression ratio for text and HTML files is 50% to 70%, which seriously improves performance on both the server and the client side. The preferred place to apply compression is the front-end. Apply it on the back-end server only if compression on the front-end is impossible. The following compression methods can be used:

More Two-Tier Configuration Hints

Potential Results

The proposed two-tier architecture and the recommendations will produce the following results.

Database Optimization

Basic Principles

Database optimization is one of the most important problems you have to work on to make the whole system fast and stable. You should clearly understand that a standard database configuration usually implies minimum hardware, memory and disk space usage. A typical configuration does not and cannot take your specific hardware and software into account. You must configure your database manually to get the highest possible performance. A common optimization strategy can be devised as follows.

There are not many general recommendations on database configuration that can be given. Many different database types exist, and each is a complex and involved software application. To fine-tune your database, read the database documentation.

However, the two-tier configuration gives us a serious advantage. Memory consumption is well balanced, so we can derive the exact amount of memory that can be reserved for the database. In most systems, 60% to 80% of memory can be allocated to the database, which improves the overall performance significantly. In the next lessons, we shall discuss the most common approaches to optimizing MySQL and Oracle databases.

Persistent database connection

The most important parameter that affects the amount of memory required by the database (MySQL or Oracle) is the maximum number of simultaneous connections ("max_connections"). A two-tier architecture can reduce the number of concurrent database connections several times and leave more memory for data sorting and buffering.

If you specify the MaxClients value in the back-end web server settings, you guarantee that the maximum number of database connections equals the maximum number of simultaneous web client connections. Consequently, the number of connections is fixed! Choose the value of the max_connections parameter so that the system keeps 10% to 20% of connections in reserve. In other words, set max_connections to a value 10% to 20% higher than MaxClients.

MySQL Configuration

For the MySQL version, optimizing database interaction is one of the most important points because Bitrix Site Manager uses database operations intensively.

The standard table format of MySQL is MyISAM. It is the simplest possible format; it stores table data and indexes in separate files. The format is fast and easy to use for simple sites with light traffic; however, it cannot ensure data integrity and safety because it lacks transactions.
In terms of performance, the main drawback of MyISAM is table-level locking. MyISAM tables thus become the weakest point of the system, preventing the back-end server from processing more client requests. Another drawback is that page execution time increases because queries have to wait at the MySQL level for locked tables to be released.

We recommend that you convert all your tables to the InnoDB format, which ensures data integrity and supports transactions and row-level locking. MySQL 4.0 and later supports InnoDB. You can switch to InnoDB tables by performing the following actions.

Changing the tables to the InnoDB format eliminates database performance bottlenecks and allows system resources to be used to the full extent.

Attention! Remember to configure InnoDB. For better database performance, fine-tune the MySQL settings in the InnoDB configuration section ("innodb_*") of my.cnf. Pay special attention to the following parameters:

set-variable = innodb_buffer_pool_size=250M
set-variable = innodb_additional_mem_pool_size=50M
set-variable = innodb_file_io_threads=8
set-variable = innodb_lock_wait_timeout=50
set-variable = innodb_log_buffer_size=8M
set-variable = innodb_flush_log_at_trx_commit=0

Recommendation. To reduce the server response time, use delayed transactions and specify

If MyISAM tables are no longer in active use on your server, you can free memory in favour of InnoDB. It is a good idea to reserve enough memory for the data cache to hold the information commonly used by Bitrix Site Manager. 60% to 80% of free system memory is usually an adequate quota.

Recommendation. Compile a multithreaded MySQL build for parallel query processing and better system performance.

The following example sets the recommended cache size for a 2 GB server running FreeBSD/Linux:

Bitrix Site Manager contains nearly 250 tables, which requires a larger MyISAM table header cache:

set-variable = key_buffer_size=16M
set-variable = sort_buffer=8M
set-variable = read_buffer_size=16M

These parameters are used only by MyISAM. If your database does not use MyISAM tables, set them to minimum values.

Set the query result cache size. 32 MB is usually enough (refer to the Qcache_lowmem_prunes status). The default maximum query result size is 1 MB, but you can change it.

set-variable = query_cache_size=64M
set-variable = query_cache_type=1

The main buffer (

The following directive sets the size of the helper buffer for internal data structures. A larger buffer size does not improve performance.

The following lines set the log file size. A larger log file causes fewer writes to the main data file. The log file size is usually proportionate to the

set-variable = innodb_log_file_size=100M
set-variable = innodb_log_buffer_size=16M

Set delayed transaction logging. This will flush transactions once per second:

Increase the size of temporary tables to 32 MB:

Important notes. Migration to InnoDB can cause significant performance degradation under massive write and update operations, which is a consequence of the transaction-oriented architecture of InnoDB. The final decision on switching to InnoDB is yours to make.

Attention! If you have decided to continue using MyISAM, remember to configure MySQL by increasing the cache size and sorting buffers and by minimizing disk operations. Use as much memory as possible because it can speed up your project significantly.

Conclusion

The maintenance history of heavy-traffic systems shows that the performance of the same system may vary greatly depending on the chosen allocation of resources and configuration. For more information on system administration and configuration, please contact Bitrix technical support at www.bitrixsoft.com